You are not looking at a sheet of cookies or ceramic tiles: these are a group of tablets from the Anatolian Civilizations Museum in Ankara, Turkey. They come from the ancient city of Kanesh, at Kültepe in the Cappadocia region of Turkey, where more than 20,000 tablets dating between 1930 and 1730 B.C. have been recovered so far. Each of these tablets records the names, places, and notable objects of a world once lost to history, but one that we can now restore and preserve through digital tools and methods. Before diving into the digital world of these objects, however, let's talk briefly about why they need protection and digital preservation.

Why build a multi-modal Linked Data Model for Cuneiform Languages?

There are numerous reasons why a linked data model will be helpful to many different parties. First, there are the scholars (i.e., Assyriologists and archaeologists) and their students, who are few in number but have a keen interest in preserving cuneiform data in order to better understand these ancient civilizations. Second, there are the local communities living near or on top of this material culture, who may not fully know or appreciate the information these objects provide, but who know that it has significant value. Third are the authorities concerned with world heritage preservation, who have recognized these tablets as artifacts at risk of being illegally looted and traded on the black market, and who urgently need help identifying such objects when they are in transit. Fourth are the museums and institutions that house these objects, sometimes with very little information about their provenance or original context. Fifth are the computational specialists, whether in computer science and machine learning, computational linguistics and natural language processing, or simply interested engineers and programmers, who are often intrigued by these objects and their inscriptions in under-supported languages, and who see an opportunity to develop computational tools that support scholars in deciphering and translating these ancient languages. These individuals also play a significant role in creating and maintaining the open source tools and frameworks used to analyze the wealth of data that results from such computational workflows. Lastly, there is the general public, who find these remnants of antiquity intriguing but are otherwise almost completely cut off from the knowledge and value these cultures have provided scholars, and may continue to provide to all of humanity.
For these parties, and any others not in this list, the move toward a linked open data project will provide greater access to the whole of the cuneiform documentation, and allow the ongoing collaboration of these parties to take place in a secure, open source environment for all the world to discover.

What most people don't know is just how many of these tablets there are. The estimated number (Streck 2010) is around half a million. However, we currently have digital records for only about 350,000 cuneiform tablets, with metadata represented online in a number of databases (e.g., CDLI, ORACC, BDTNS). Perhaps only half of these have good 2D photos available online, and even fewer have digital text with transcriptions or translations accompanying their museum catalog data. That leaves roughly 150,000 tablets that have been accounted for in some form but are not yet available in any open online database. Some of these are no doubt "unpublished" tablets, known to scholars and museums but kept from the public until a proper edition (transliteration and translation) can be provided in print. An alarming number of tablets fall into this category, and they are kept out of open databases largely because their housing institutions lack access to the scholarly resources needed to produce text editions.

To add to this challenge of digitization, it is important to keep in mind that such tablets are still coming out of the ground. Each season there are official excavations (and unofficial looting) taking place, and the results of these efforts are often objects and artifacts with inscriptions in cuneiform writing. They may be given to museums or end up on the market in some form, or they may never reach the public if they end up in the hands of private collectors.

Ancient Tablets as “Big Business”?

While the illicit excavation and looting of these ancient sites has gone on for as long as history records, it has definitely become a form of "big business" in the region since the 90s, with rogue groups like ISIS hard at work searching for ancient sites in their territory in order to loot artifacts as a funding source. The site pictured in slide 6, pockmarked like the craters on the moon, is ancient Isin (Ishan Bahriyat), an important city-state already by the early second millennium BCE, and not far from where the Hobby Lobby Collection was most likely looted. The site is first known to us through a small number of archives dating to 1900 B.C.E. One such group of texts comes from the Šinkaššid Palace in Uruk, about 45 miles southeast of Isin, and records a high degree of inter-regional trade and treaties among the existing and emerging city-states. These city-states regularly displayed their cultural prowess and prestige through diplomatic gift-giving, producing thousands of objects made of silver, gold, lapis lazuli, carnelian, and other precious materials. No doubt for this reason, it is often the palace that has been plundered when archaeologists are able to examine a looted site. If such documents are assumed to record palace inventories, the conditions are ideal for a treasure hunt of epic proportions, especially when there is apparently nothing but desert for miles around.

All the excavation sites where cuneiform artifacts have been found (embedded Query Service map)

Evidence of the scale of such looting can be seen in the ledgers of ISIS leaders, some of which emerged after the Abu Sayyaf Raid of May 15, 2015. Jones (2016) describes how "a raid by American special forces on an ISIS safe house in a small village outside Deir ez-Zor in Syria killed ISIS leader Fathi Ben Awn Ben Jildi Murad al-Tunisi, better known by his nickname Abu Sayyaf, freed an 18-year old Yazidi woman, and captured a trove of documents. Some of the documents captured during the raid were declassified several months later and have already been discussed in detail …, illustrating the inner workings of ISIS' Diwan al-Rikaz or Department of Natural Resources. The documents showed that ISIS had systematized archaeological looting, with departments dedicated to the research, discovery and exploitation of new archaeological sites and a permit system to authorize diggers." The recovered documents "showed that ISIS classified antiquities as a natural resource alongside oil and minerals, as something to be extracted from the ground rather than as looted items or spoils of war. Most important, the raid captured a receipt book detailing the khums tax levied on authorized antiquities diggers in ISIS' Wilayah al-Kheir (largely coterminous with Syria's Deir ez-Zor governate, with some territory in Iraq). The book contained eight receipts, which showed that in the period from November 2014 to May 2015 Abu Sayyaf had collected $265,000 in taxes on looted antiquities, which multiplied by the 20% tax rate showed that the value of looted antiquities was around $1.25 million. However, these receipts were but a snapshot and could not show how much money ISIS had made in total from antiquities, or what percentage of their revenue was derived from antiquities looting." It is clear to specialists in this area that ISIS took the looting of cultural heritage to a new, more systematic level of destruction.
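As a quick check on the figures in the quotation: if the khums receipts total T and the tax rate is r, the implied value of the taxed antiquities is T divided by r.

```latex
V \approx \frac{T}{r} = \frac{265{,}000\ \text{USD}}{0.20} = 1{,}325{,}000\ \text{USD}
```

That is roughly $1.3 million, the same order as the "around $1.25 million" quoted, and, as the quotation stresses, only a snapshot of the total trade.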
Unfortunately, this type of looting has only escalated since the Gulf War in the 90s, which means that we continue to encounter looting happening on an industrial scale, often with evidence of large backhoe buckets having scooped the ancient remains of palaces, houses and neighborhoods out of the ground.

Among these looted sites was the Sumerian city known today as Drehem (ancient Puzriš-Dagan, featured in slide 7), which was looted in the 90s. Over time, cuneiform tablets, bullae, cylinder seals, and other material from the site emerged on the antiquities market, and photographs of them appeared on the internet. Fortunately, a few scholars collected these photos, transliterated the texts, and published them both in print and digitally in the online database of Neo-Sumerian texts (BDTNS). Through careful attention to details on the tablets themselves, and despite having very little archaeological context, these scholars have been able to assign about 15,000 texts to Drehem as their provenance in the online cuneiform databases.

Due to recent high-profile scandals, the general public may already be familiar with the present state of affairs involving the collections of artifacts from the ancient Near East. These images (in slide 8) were taken after the US Dept. of Homeland Security seized a large collection of artifacts procured illegally by the president of Hobby Lobby, Steve Green. (NPR reported May 2018: https://www.npr.org/sections/thetwo-way/2018/05/01/607582135/hobby-lobbys-smuggled-artifacts-will-be-returned-to-iraq)

The Department of Justice reported (July 2017) that: “The Oklahoma-based chain of retail stores bought more than 5,500 objects from dealers in the United Arab Emirates and Israel in 2010… The purchase was made months after the company was advised by an “expert on cultural property law” [who] had warned Hobby Lobby that artifacts from Iraq, including cuneiform tablets and cylinder seals, could be stolen from archaeological sites. The expert also told the company to search its collections for objects of Iraqi origin and make sure that those materials were properly identified.” This no doubt refers to the collection the Greens had been assembling for many years for their Museum of the Bible. “But despite that warning Hobby Lobby arranged to purchase thousands of antiquities — including cuneiform tablets and bricks, clay bullae and cylinder seals — for $1.6 million. Some artifacts from the UAE bore shipping labels that falsely described them as “ceramic tiles” or “clay tiles (sample)” originating in Turkey. Other items were sent from Israel with a false declaration that they were from there.”

Of course, with this accusation came a formal statement from the late Robert E. Cooley, former director of Hobby Lobby's Museum of the Bible: “We maintain our standards at the museum. And if any collection, be it the Green family or any other family that donates, does not meet the standards that we require, then we do not take that collection” (https://www.washingtonpost.com/news/acts-of-faith/wp/2017/07/06/hobby-lobbys-3-million-smuggling-case-casts-a-cloud-over-the-museum-of-the-bible/?noredirect=on&utm_term=.7526d2f92347).

It is often the unsung work of museum curators, who take it upon themselves to provide important information to the leaders and authorities of such institutions, that gives those leaders the choice of pursuing an equitable path of repatriation. Thanks to open databases like FactGrid, the methods for digital curation of these ancient artifacts can now be extended to an international audience (for more on digital curation, see Anderson 2022).

Knowing the history of these artifacts, coupled with the urgency of preserving cultural heritage at risk, creates a strong impetus to enlist computational specialists in the effort of digital curation.

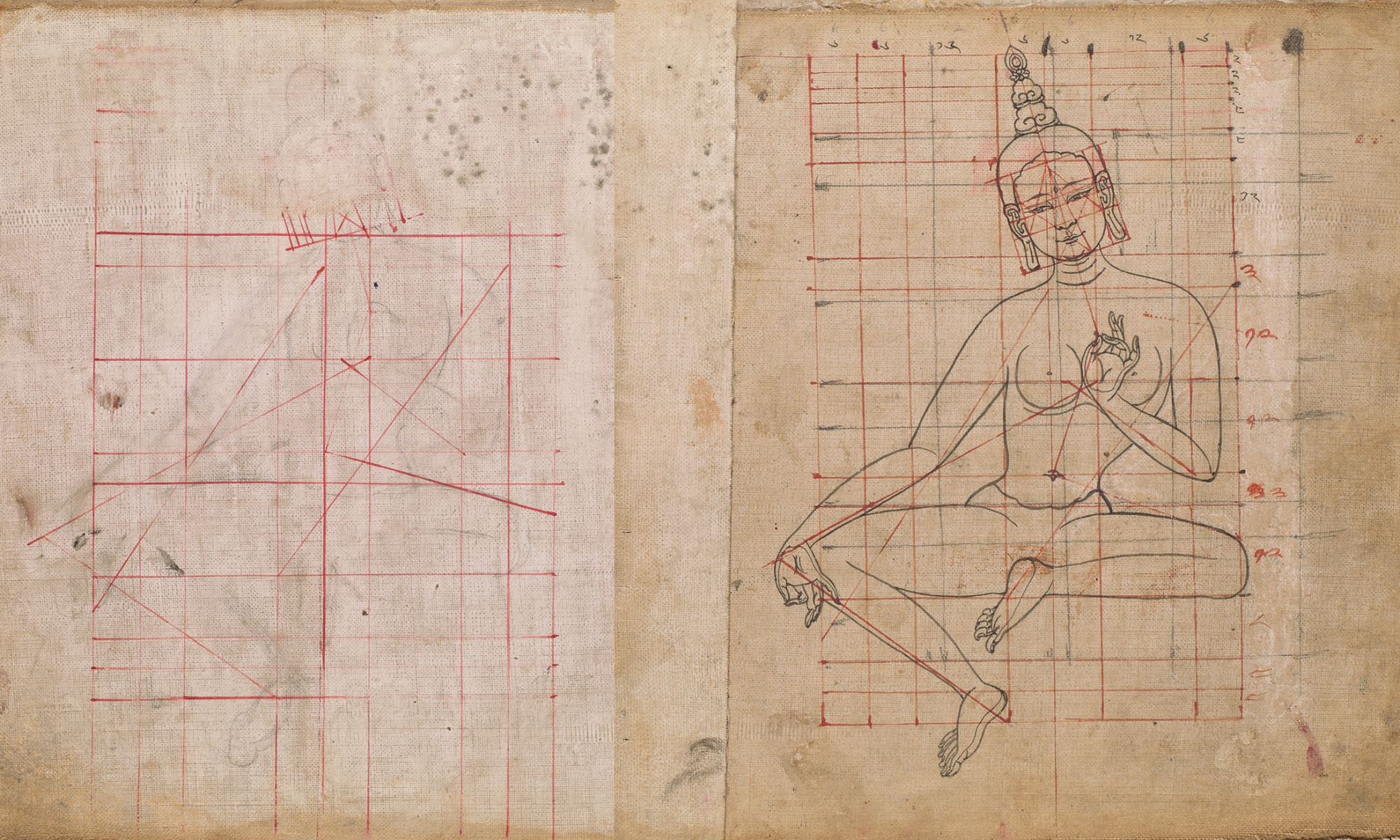

That said, cuneiform data is inherently multi-dimensional (e.g., slide 9), from the measurements of the tablet to the signs impressed into its curved clay surface, which makes it very challenging for the scholarly community to create accurate digital records. Significant advances in the tech industry can provide new avenues into these dimensions, including 3D scans of cuneiform tablets. A research team from Heidelberg and Mainz, Germany, directed by Hubert Mara, has developed software that recognizes the depth of each wedge impression in the clay and the wedge pairs that make up a sign. They have expressed interest in partnering with museums to digitize their collections, or in sharing their tools for optical character recognition (OCR) (see Bartosz, Mara & Homburg).

3D technology now lets anyone with a smartphone make a digital replica of an object, which is ideal for working with cuneiform tablets. Because a tablet can carry writing on all six of its sides, traditional photos often left parts of the text obscured. This new development in visualization has incredible potential for linking all forms of data into a "digital twin". Each artifact that contains writing has a wealth of relational data, which scholars can use to reconstruct the archaeological context of tablets and other objects with contemporary micro-stratigraphy, whether the objects were found in situ or came to us through looting and the antiquities market.

There are also a number of applications of network analysis that visualize the aggregated named entities of thousands of related tablets, along with efforts that link linguistic features to 2D and 3D image segmentation of the tablets for machine learning and the development of machine translation. In the example illustrated in slide 17, three personal names written on a tablet can be visualized as a network. This small group forms what demographers call a 'cohort' of related persons, and allows us to make certain assumptions about their temporal and geographical proximity. We can then use these cohort groups, along with any other identifying data, to merge the instances of a named entity across a series of tablets into a single node representing one individual. The primary challenge in such a process is the lack of any 'gold standard', meaning that we can't go and ask these people whether they were, in fact, the ones mentioned in these groups of ancient documents. Therefore, scholarly collaboration and verification of the data are very important, but until recently this form of digital collaboration has been very difficult to pursue.
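A minimal sketch of the two steps just described, using made-up data: building a weighted co-occurrence network from the names on each tablet, and proposing merges of same-name attestations whose cohorts overlap. The tablet IDs, the `PN_*` placeholder names, the Jaccard-overlap heuristic, and the 0.3 threshold are all illustrative assumptions, not the project's actual method.

```python
from collections import Counter
from itertools import combinations

# Made-up data: tablet IDs mapped to the personal names (PN) read on each.
TABLETS = {
    "T1": ["PN_A", "PN_B", "PN_C"],
    "T2": ["PN_A", "PN_B", "PN_D"],
    "T3": ["PN_A", "PN_E"],
}

def cooccurrence_edges(tablets):
    """Weighted edges: how many tablets each pair of names shares."""
    edges = Counter()
    for names in tablets.values():
        # sorted(set(...)) counts each unordered pair once per tablet
        for a, b in combinations(sorted(set(names)), 2):
            edges[(a, b)] += 1
    return edges

def cohort(tablets, tablet_id, name):
    """The other people named alongside `name` on one tablet."""
    return set(tablets[tablet_id]) - {name}

def jaccard(x, y):
    """Share of cohort members two attestations have in common (0.0 to 1.0)."""
    union = x | y
    return len(x & y) / len(union) if union else 0.0

def merge_candidates(tablets, threshold=0.3):
    """Propose pairs of same-name attestations whose cohorts overlap enough.

    These are only proposals: without a gold standard, each needs scholarly review.
    """
    tablets_by_name = {}
    for tablet_id, names in tablets.items():
        for name in set(names):
            tablets_by_name.setdefault(name, []).append(tablet_id)
    proposals = []
    for name, ids in sorted(tablets_by_name.items()):
        for t1, t2 in combinations(sorted(ids), 2):
            score = jaccard(cohort(tablets, t1, name), cohort(tablets, t2, name))
            if score >= threshold:
                proposals.append((name, t1, t2, round(score, 2)))
    return proposals

print(cooccurrence_edges(TABLETS).most_common(1))  # → [(('PN_A', 'PN_B'), 2)]
print(merge_candidates(TABLETS))
```

Because there is no gold standard, the output is a list of candidates for scholars to review rather than a set of automatic merges.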

Linked Open Data offers an attractive solution both to the need to secure data in a version-controlled platform (with Wikibase) and to the need to provide global access to that data under open, interoperable LOD standards. This pragmatic focus on the usability of the data is essential if machine learning tools and methods are to develop in support of these languages. It is unreasonable to expect such specialists to spend countless hours simply gathering data about the same objects from the multiple databases that house it. By providing a hub where every aspect of these "digital twins" can be accessed and added to, we will open up this cultural heritage to more holistic computational modelling and analysis, with reproducibility and replicability built in from the start.

Because Wikidata combines stable URIs for its Linked Open Data (LOD) entities with a complete revision history that records every edit and its editor (much as version control does in git), it has become a de facto standard knowledge base for the semantic web. These practices have enabled researchers to build projects that collaborators around the world can easily edit, in any language. FactGrid, which runs on the same Wikibase software, allows for an extensive list of data types and their relational properties, and, perhaps more importantly, its framework allows for the creation of new properties and item types.

Taking the example of a cuneiform tablet, we can see (in slide 23) how Wikidata already accommodates the many forms of data and metadata that scholars use to query and identify each tablet in space and time, and to list the people, places, commodities and events inscribed on the document. Each of these entities can link to additional features through LOD triple statements: for example, a personal name can be linked to the person's family name, role, profession, and activities, along with any other entities mentioned in the text. Each tablet can also be given statements for an accompanying seal inscription, its historical period (in its many formats), and any bibliographic data that relates other scholarship (e.g., book and journal publications) to that particular tablet.
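To make the idea of triple statements concrete, here is a minimal sketch in plain Python. The item IDs (`tablet:1`, `person:PN_A`) and property names (`mentions_person`, `father`, and so on) are hypothetical stand-ins, not real Wikidata or FactGrid identifiers; in a Wikibase, items and properties would be Q- and P-numbered entities.

```python
from typing import List, NamedTuple, Tuple

class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str

# Hypothetical IDs and property names, for illustration only.
TRIPLES = [
    Triple("tablet:1", "instance_of", "cuneiform tablet"),
    Triple("tablet:1", "provenience", "place:Drehem"),
    Triple("tablet:1", "period", "Ur III"),
    Triple("tablet:1", "mentions_person", "person:PN_A"),
    Triple("tablet:1", "has_seal_inscription", "seal:1"),
    Triple("tablet:1", "published_in", "bib:1"),
    Triple("person:PN_A", "profession", "scribe"),
    Triple("person:PN_A", "father", "person:PN_B"),  # a patronymic as a link
]

def describe(subject: str, triples: List[Triple]) -> List[Tuple[str, str]]:
    """All (property, value) pairs stated about one entity."""
    return [(t.predicate, t.obj) for t in triples if t.subject == subject]

def follow(subject: str, predicate: str, triples: List[Triple]) -> List[str]:
    """Values reached from `subject` along one property."""
    return [t.obj for t in triples if t.subject == subject and t.predicate == predicate]

# From the tablet to the people it names, and on to what is known about them.
for person in follow("tablet:1", "mentions_person", TRIPLES):
    print(person, describe(person, TRIPLES))
```

Each hop from the tablet to a person and on to that person's father is just another triple, which is what lets the graph grow without changing any table layout.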

Using a graph database design, it is possible to provide persistent identifiers both for the data points and for the relations between them (the nodes and edges that make up a triple statement). This makes each data point more discoverable by allowing 'fuzzy' semantic searches, in which one may not know exactly where a data point lives in a database but has enough contextual information to find it. In this structure, a data point is not confined to a single field but can be linked to any number of related fields as the graph grows over time. To illustrate, take a cuneiform tablet, which is a unique object. This object may have many different identifiers, as it has been indexed in different databases over time, and anyone who wants to learn more about it must know where to go to find its data and metadata. In a relational database, one typically has to search with the exact identifier a particular database uses. In a graph database using triple statements, the same tablet can be given a single URI, which is then linked to each database that has indexed it, along with any other information about the object. Together, these approaches make up the standard known as Linked Open Data, which is described in Wikidata's ontology (see also the Wikipedia entry for Linked Data).
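The single-URI idea can be sketched with a tiny in-memory triple store and a pattern matcher in which `None` acts as a wildcard, loosely analogous to a variable in a SPARQL query. The URIs, property names, and identifier values are invented for illustration; `P000001` only imitates the shape of a CDLI P-number.

```python
from typing import List, Optional, Set, Tuple

Triple = Tuple[str, str, str]
Pattern = Tuple[Optional[str], Optional[str], Optional[str]]

# Invented data: one URI per tablet, linked to the IDs other databases use for it.
TRIPLES: List[Triple] = [
    ("ex:tablet/1", "cdli_id", "P000001"),
    ("ex:tablet/1", "museum_number", "MUS 1234"),
    ("ex:tablet/1", "provenience", "ex:place/Drehem"),
    ("ex:tablet/2", "cdli_id", "P000002"),
]

def match(pattern: Pattern, triples: List[Triple]) -> List[Triple]:
    """Return the triples matching (s, p, o); None in any position is a wildcard."""
    return [t for t in triples
            if all(q is None or q == v for q, v in zip(pattern, t))]

def resolve(identifier: str, triples: List[Triple]) -> Set[str]:
    """Find the URI(s) of whatever object any database knows by `identifier`."""
    return {s for s, _, _ in match((None, None, identifier), triples)}

def about(uri: str, triples: List[Triple]) -> List[Tuple[str, str]]:
    """Everything recorded about one URI, as (property, value) pairs."""
    return [(p, o) for _, p, o in match((uri, None, None), triples)]

# A museum number is enough to reach the CDLI record, and vice versa.
for uri in resolve("MUS 1234", TRIPLES):
    print(uri, about(uri, TRIPLES))
```

The point of the design is that no one needs to know in advance which database's identifier they are holding: any of them leads to the same URI.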

Stability and Versioning

Git-based repositories have become the 'best practice' for releasing code that is still in development while preserving reproducibility, because every change is recorded along with its date and the ID of the person who made it. These repositories can also be more inclusive and open, documenting every contributor to a project by name. This matters not only for proper accreditation but also for sustainability, since someone wishing to replicate the research may need to contact a member of the team. Git-based hosting platforms such as GitHub and GitLab have quickly become an academic standard for scientific studies that rely on code or reproducible methods, letting teams collaborate on pipelines for work that is ongoing and iterative but must also remain reproducible and replicable.

The flexibility of these new digital methods creates many opportunities but also presents additional challenges, since the methods are still in development and will likely continue to evolve for many years. The sense that a research project may never be "finished" should encourage project directors to adopt these more stable and reproducible methods when they design a research project and divide its work among their team. The CDLI, whose development work has included Google Summer of Code (GSoC) projects, is a good example of this type of workflow:

“At the CDLI, we are versioning text and now catalogue entries and we could extend this system to all entities, including named entities. We will definitely be versioning narrative entries concerning all words (including named entities). Older versions are not as accessible, but other projects (e.g., in classics) are leading in that avenue and I am looking up to them for improvement in the future.

When doing a study, it is important to provide a frozen version of the data and of the research processing code so other researchers can either try to replicate the results, or use a similar dataset to see the outcomes with the same method applied. At CDLI we provide daily dumps of all our data that can be linked to on Github and a monthly release with a DOI through Zenodo. A similar workflow can be applied with any dataset. Stability also comes from sustainability: it is important to think about long term archival of datasets.” (Émilie Pagé-Perron, personal correspondence)
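The "frozen version" described here can be approximated by publishing a checksum alongside each release, so that anyone replicating a study can confirm they hold exactly the same data. A minimal sketch using SHA-256 from Python's standard library, assuming each record is a JSON-serializable dict with an `id` field (an assumption for illustration, not CDLI's actual dump format); the version label is likewise hypothetical.

```python
import hashlib
import json

def canonical_bytes(records):
    """Serialize records deterministically: sorted by id, keys sorted, no extra whitespace."""
    ordered = sorted(records, key=lambda r: r["id"])
    return json.dumps(ordered, sort_keys=True, ensure_ascii=False,
                      separators=(",", ":")).encode("utf-8")

def freeze(records, version):
    """Describe a release: a version label, the record count, and a SHA-256 checksum."""
    return {
        "version": version,
        "count": len(records),
        "sha256": hashlib.sha256(canonical_bytes(records)).hexdigest(),
    }

def verify(records, manifest):
    """True if a local copy contains the same data (in canonical order) as the release."""
    return freeze(records, manifest["version"]) == manifest

# Hypothetical records; the order in which they arrive does not affect the checksum.
release = [{"id": "P000002", "text": "..."}, {"id": "P000001", "text": "..."}]
manifest = freeze(release, version="2022-01")
print(manifest["sha256"])
```

Sorting before hashing is a deliberate choice: two dumps of the same data should verify as identical even if they were exported in a different order.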

With so many interested and invested parties, it's difficult to find a one-size-fits-all solution for this multi-dimensional data, which is why a linked data solution is so attractive. By using a Wikibase in FactGrid to store LOD triples, we can let scholars and museums keep their records in the platforms they prefer, while still linking those data for greater accessibility and more holistic computational work. Our goal, then, is not to force scholars onto an unfamiliar platform, but to let them continue curating these objects in the catalogs they already use, and then to aggregate this data into a Wikibase where anyone in the world can access these datasets with greater discoverability. To this end, we are building data pipelines and APIs for pushing and pulling these datasets between their existing websites and the FactGrid Cuneiform project (see slide 26), and, when desired, we can even push the triple statement IDs back to those sites to create greater transparency around the existing URIs.
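The push/pull pipeline could look roughly like the sketch below: records pulled from a source catalog are mapped to statements, and a CSV of source-ID-to-hub-item pairs is produced for the source site to import. The field names, the `P_*` property IDs, and the `mint_id` callback are hypothetical placeholders; a real pipeline would call the Wikibase API to create items and would use FactGrid's actual property IDs.

```python
import csv
import io
from itertools import count

# Hypothetical mapping from a source catalogue's fields to hub properties.
# The P_* names are placeholders, not real FactGrid property IDs.
FIELD_TO_PROPERTY = {
    "museum_no": "P_MUSEUM_NUMBER",
    "provenience": "P_PROVENIENCE",
    "period": "P_PERIOD",
}
SOURCE_ID_PROPERTY = "P_SOURCE_ID"

def to_statements(record):
    """Map one catalogue record to (property, value) pairs, always keeping its source ID."""
    statements = [(SOURCE_ID_PROPERTY, record["id"])]
    for field, prop in FIELD_TO_PROPERTY.items():
        value = record.get(field)
        if value:  # skip empty fields instead of writing blank statements
            statements.append((prop, value))
    return statements

def pull(records, mint_id):
    """Pull records into the hub; `mint_id` stands in for a real item-creation call."""
    return {mint_id(record): to_statements(record) for record in records}

def push_back(items):
    """Export source-ID/hub-item pairs as CSV so the source site can link back."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["source_id", "hub_item"])
    for item_id, statements in sorted(items.items()):
        writer.writerow([dict(statements)[SOURCE_ID_PROPERTY], item_id])
    return out.getvalue()

# Usage with a made-up record and a counter in place of the real API.
_ids = count(1)
items = pull([{"id": "P000001", "museum_no": "MUS 1234", "provenience": "Drehem", "period": ""}],
             mint_id=lambda record: f"ITEM-{next(_ids)}")
print(push_back(items))
```

Keeping the source ID as an ordinary statement means the link back to the original catalog survives any later edits in the hub.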

Thanks to a close-knit scholarly network, many scholars are already eager to help, so our project is well on its way. We began by laying the literal groundwork for the project through a partnership with GLoW, CDLI and ORACC, who provided geographical data on the provenience of the tablets, with geo-coordinates for over 600 known locations where cuneiform tablets have been discovered (see slides 13-14). Subsequent discussions about the important features of prosopographical research have led to a series of helpful guidelines for our project (see Waerzeggers, et al. forthcoming).
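Once site coordinates like these are in a Wikibase, a map of the sites can be built from a SPARQL query. The sketch below converts results in the standard SPARQL 1.1 JSON results format into GeoJSON. It assumes, as on the Wikidata Query Service, that coordinates come back as WKT literals with longitude first (e.g. `Point(45.2 31.9)`); the variable names `siteLabel` and `coord`, the site names, and the coordinates are illustrative.

```python
import json
import re

# A WKT point, longitude first; re.search also skips any globe IRI prefix.
WKT_POINT = re.compile(r"Point\(\s*([-+]?\d+(?:\.\d+)?)\s+([-+]?\d+(?:\.\d+)?)\s*\)")

def bindings_to_geojson(results, label_var="siteLabel", coord_var="coord"):
    """Convert SPARQL JSON results into a GeoJSON FeatureCollection of points."""
    features = []
    for row in results["results"]["bindings"]:
        if coord_var not in row:
            continue  # unbound (e.g. OPTIONAL) coordinate: nothing to plot
        m = WKT_POINT.search(row[coord_var]["value"])
        if m is None:
            continue  # not a point literal this sketch understands
        lon, lat = float(m.group(1)), float(m.group(2))
        features.append({
            "type": "Feature",
            # GeoJSON also puts longitude first, so no swap is needed
            "geometry": {"type": "Point", "coordinates": [lon, lat]},
            "properties": {"name": row.get(label_var, {}).get("value", "")},
        })
    return {"type": "FeatureCollection", "features": features}

# Illustrative results in the SPARQL 1.1 JSON format (made-up sites and coordinates).
sample = {
    "head": {"vars": ["siteLabel", "coord"]},
    "results": {"bindings": [
        {"siteLabel": {"type": "literal", "value": "Site A"},
         "coord": {"type": "literal",
                   "datatype": "http://www.opengis.net/ont/geosparql#wktLiteral",
                   "value": "Point(45.2 31.9)"}},
        {"siteLabel": {"type": "literal", "value": "Site B"}},  # no coordinates yet
    ]},
}
print(json.dumps(bindings_to_geojson(sample), indent=2))
```

The resulting GeoJSON can be loaded by most web mapping libraries, which is one way to share the site map outside the query service itself.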

Our next steps will be to add the relevant authority files from the catalog entries of the existing open cuneiform databases, and then to provide URIs for each tablet, each line of its text, and the entities named on it. From there we can turn to the properties of the different entities mentioned on a tablet and their corresponding attributes, such as patronymics, professions, roles, activities, dossiers and archives. When a tablet records a date, we will link it to the reigning ruler, since regnal years were the common form of dating throughout this time. Through this process we hope to have triples for each person, place, and thing mentioned on each tablet.
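A regnal date can be stored as the tablet gives it (ruler, year, and optionally month and day) and linked to the ruler's item, with any conversion to an absolute year kept as a separate, derived step. A minimal sketch: the ruler ID and the accession year in `REIGNS` are placeholders, and the assumption that regnal year 1 falls in the accession year is a simplification, since counting conventions and absolute chronologies differ between periods and scholars.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class RegnalDate:
    """A date as written on a tablet, linked to the reigning ruler's item."""
    ruler: str                    # hypothetical item ID, e.g. "ruler:X"
    year: int                     # regnal year as recorded
    month: Optional[int] = None
    day: Optional[int] = None

# Placeholder accession years (BCE); not real chronological data.
REIGNS = {"ruler:X": 2000}

def approx_year_bce(date: RegnalDate, reigns=REIGNS) -> int:
    """Derive an approximate absolute year BCE from a regnal date.

    Simplifying assumption: regnal year 1 falls in the accession year, so
    year n lies (n - 1) years later, i.e. at a smaller BCE number.
    """
    if date.year < 1:
        raise ValueError("regnal years are counted from 1")
    return reigns[date.ruler] - (date.year - 1)

print(approx_year_bce(RegnalDate("ruler:X", 3, month=5)))  # → 1998
```

Storing the date as recorded and deriving the absolute year on demand means a change in the preferred chronology only touches the reign table, not every tablet.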

If you're still wondering why we go to all this trouble to label every proper noun, the impetus lies in the desire for a less ambiguous prosopography, which in turn will allow scholars and all parties involved to learn much more about the ancient world as seen from the perspective of individuals over the course of their lives, along with their geographical movements and their social impact on the markets of the day.

Lastly, we are also motivated by the hope of what could happen when we open this data up to the wider scientific community. Recently, archaeologists and engineers have applied imaging and analytical techniques such as XRF and CT scanning, which can provide new insights into the composition and elemental characteristics of these objects. There will no doubt be many more such "see-through" tools and methods to come as the sciences continue to advance. There is great pioneering potential for new discoveries, which is why it is so important to lay the groundwork now for keeping all these different data linked together under the open standard of Linked Open Data. Thanks to FactGrid, this will be made possible.